Tech Topic | June 2019 Hearing Review

The number-one desire of people with hearing loss is not to make sound louder; it’s to hear well in noise. Improving the signal-to-noise ratio in hearing aids has evolved rapidly in the last several years. The evolution now continues with new viable and pragmatic combinations of hearing aids, Internet, smartphones, multiple wireless protocols, GPS, and more, opening up entirely new avenues for truly personalized hearing care.

For people with normal hearing and listening ability, it may be surprising to learn that the chief complaint of the approximately 37 million people in the United States with audiometric sensorineural hearing loss (SNHL) is not that they want or need sounds louder. Rather, the chief complaint among people with SNHL is their inability to understand speech-in-noise (SIN). Further, an additional 26 million people who have no audiometric hearing loss (ie, their thresholds are within normal limits) also have hearing difficulty and/or SIN difficulty.1 Therefore, people with SNHL, people with hearing difficulty, and people with SIN problems need more than louder sound: they need devices that improve their ability to understand SIN.

As hearing aids, wireless protocols, apps, and technology continue to improve, future hearing aids will be better able to help people hear and listen in noise. Specifically, substantial advances that draw synergistic benefits from the Internet, smartphones, multiple wireless protocols, GPS, and more are being applied to sophisticated hearing aids to help the wearer better understand speech-in-noise.

A Philosophical Approach to Improving Speech in Noise

The single most important thing hearing care professionals can do to improve the ability of a person with SNHL to understand SIN is to facilitate an improved signal-to-noise ratio (SNR). Adaptive, non-adaptive, and beamforming directional microphones, as well as various noise reduction protocols and algorithms, have been incorporated into hearing aids to improve the SNR, and many of these approaches have proven very useful. However, when applied through open-canal fittings in realistic acoustic environments where speech babble surrounds the listener (as happens in restaurants, weddings, airports, and similarly challenging settings), these protocols and algorithms generally improve the SNR by only up to about 3 or 4 dB.

Fortunately, tools such as FM systems and digital remote microphones (RM) wirelessly connect the person speaking to the person listening, and telecoils and loop systems can connect one speaker with many listeners. FM, RM, and telecoil systems all yield significantly reduced background noise and an SNR improvement of 12 to 15 dB for those motivated and willing to use these technologies. Unfortunately, although these wireless transmitting devices offer unrivaled benefit, few adults receive demonstrations of that benefit. Further, stigma, cost, and other issues may contribute to the lack of acceptance of wireless systems.
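Because SNR figures are quoted in decibels, the gap between 3 to 4 dB and 12 to 15 dB is larger than it may look. The short Python sketch that follows (our illustration; the function names are hypothetical) converts the dB improvements mentioned above into linear speech-to-noise power ratios. Every 10 dB is a tenfold change in power, so 3 dB roughly doubles the ratio while 15 dB multiplies it by about 32.

```python
import math


def snr_db(speech_power: float, noise_power: float) -> float:
    """Signal-to-noise ratio in decibels from linear power values."""
    return 10 * math.log10(speech_power / noise_power)


def power_ratio(improvement_db: float) -> float:
    """Factor by which an SNR improvement (in dB) multiplies the speech-to-noise power ratio."""
    return 10 ** (improvement_db / 10)


# SNR improvements cited in the text, in dB
improvements = {
    "hearing aid processing (low end)": 3.0,
    "hearing aid processing (high end)": 4.0,
    "FM/RM/telecoil (low end)": 12.0,
    "FM/RM/telecoil (high end)": 15.0,
}
for label, db in improvements.items():
    print(f"{label}: {db:.1f} dB -> x{power_ratio(db):.1f}")
```

With these inputs the power ratios come out at roughly 2.0, 2.5, 15.8, and 31.6.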

With regard to hearing aids, SIN, and improving the SNR, Beck and Le Goff2 (2017) reported an average SNR improvement of 6.3 dB (25 listeners, average age 73 years) in challenging listening situations while using Multi-Speaker Access Technology (MSAT)3, made available through OpenSound Navigator (OSN) in Oticon’s Opn 1 hearing aids. In 2018, Ohlenforst and colleagues4 demonstrated that Oticon’s Opn 1 improves listeners’ ability to understand speech-in-noise in difficult listening situations by 5 dB SNR. Beck et al5 recently reviewed many of the foundational publications and outcomes-oriented benefits related to the first generation of OSN and MSAT, showing how technology has evolved and improved the ability to understand SIN via improved SNRs over the last decade. Building on the early success of MSAT, the philosophical approach to improving the SNR has remained steadfast, while the technologies themselves, as well as their speed and strength, have evolved and matured further through the release of Opn S.6

The evolution continues. In tandem with wireless and hearing aid solutions, another viable and pragmatic avenue for improving SIN understanding is to combine hearing aids, the Internet, smartphones, multiple wireless protocols, GPS, and more in ways that may anticipate the needs and intent of the hearing aid wearer.

Choice and Intent

The Framework for Understanding Effortful Listening (FUEL)7 presents listening effort as a function of the difficulty of the listening situation and the motivation of the listener. When a hearing aid changes settings in a difficult situation, such as by activating MSAT (see above), it reduces the listening effort the wearer needs to understand speech in noise. Of course, if the hearing aid were not “smart” and could not reprogram itself, a sufficiently motivated wearer might instead apply more effort and cope with more noise.

For example, one may occasionally choose to be in a noisy and crowded acoustic environment and simply hear everything, even if listening successfully requires substantial additional effort. Participating in noisy environments is often a choice. However, when the wearer’s choice and intent in an acoustically challenging situation are known, preferred settings that reduce listening effort can be made available to (and through) sophisticated hearing aids.

Smartphones connected to hearing aids can (and already do) log acoustic characteristics of the sound environment along with the wearer’s previous and preferred behavior. For example, listening preferences such as loudness, directionality, noise reduction, and more can be applied to the current situation in real time.
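To make the logging idea concrete, here is a minimal Python sketch under stated assumptions: each log entry pairs a hypothetical acoustic class (for example "babble") with the settings the wearer chose, and the "preferred" settings for the current class are simply the ones chosen most often. The names `Settings`, `LogEntry`, and `preferred_settings` are illustrative only and are not taken from any Oticon app or API.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    """Hypothetical snapshot of wearer-adjustable settings (frozen so it can be counted)."""
    loudness_step: int      # volume offset in steps, e.g. -2..+2
    directionality: str     # e.g. "omni" or "narrow"
    noise_reduction: str    # e.g. "off", "mild", "maximal"


@dataclass(frozen=True)
class LogEntry:
    """One logged observation: the acoustic class and the settings in use."""
    environment: str        # hypothetical classifier output, e.g. "quiet", "babble"
    settings: Settings


def preferred_settings(log: list[LogEntry], environment: str, default: Settings) -> Settings:
    """Most frequently chosen settings in this environment class, or `default` if none logged."""
    counts = Counter(entry.settings for entry in log if entry.environment == environment)
    if not counts:
        return default
    return counts.most_common(1)[0][0]


# Usage: two of three "babble" entries used narrow directionality with maximal noise reduction.
calm = Settings(0, "omni", "off")
busy = Settings(1, "narrow", "maximal")
log = [LogEntry("babble", busy), LogEntry("babble", busy), LogEntry("babble", calm)]
current = preferred_settings(log, "babble", default=calm)
```

A frequency count is the simplest possible notion of "preferred"; a real system would likely weight recent entries more heavily as the wearer adapts.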

It is likely that, for many people and across many acoustic environments, the wearer’s intent can be “learned” by analyzing objective data (ie, preferred settings) from previous exposures to similar noises. Furthermore, smartphones can log even more data, such as location and activity, which provide further insight into the wearer’s behavior. (Because privacy is not trivial and the hearing aid is capable of updating information about every 10 seconds, location logging, or geotagging, is optional for the wearer.)
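One simple way to "learn" intent from similar past noises is a k-nearest-neighbour vote, sketched in Python below. This is a swapped-in textbook technique, not the method used in any product; the features (overall level and estimated SNR, both in dB), the `Sample` record, and the `geotag_opt_in` flag are hypothetical. Location is stored only when the wearer opts in, mirroring the optional geotagging described above.

```python
from __future__ import annotations

import math
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sample:
    noise_level_db: float                            # hypothetical feature: overall sound level
    snr_db: float                                    # hypothetical feature: estimated SNR
    chosen_msat: str                                 # setting the wearer chose, e.g. "low" or "high"
    location: Optional[tuple[float, float]] = None   # (lat, lon), kept only with opt-in


def record(history: list[Sample], noise_level_db: float, snr_db: float, chosen_msat: str,
           location: Optional[tuple[float, float]] = None, geotag_opt_in: bool = False) -> None:
    """Append a sample; discard the location unless the wearer opted in to geotagging."""
    history.append(Sample(noise_level_db, snr_db, chosen_msat,
                          location if geotag_opt_in else None))


def predict_msat(history: list[Sample], noise_level_db: float, snr_db: float, k: int = 3) -> str:
    """k-nearest-neighbour vote over the past samples with the most similar acoustics."""
    if not history:
        raise ValueError("no logged samples yet")
    nearest = sorted(
        history,
        key=lambda s: math.dist((s.noise_level_db, s.snr_db), (noise_level_db, snr_db)),
    )[:k]
    return Counter(s.chosen_msat for s in nearest).most_common(1)[0][0]
```

Both features are in dB, so plain Euclidean distance is used without rescaling; features in mixed units (for example location or time of day) would need normalizing first.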

Time, Space, and the Adaptation Journey

In Denmark, we recently analyzed MSAT pilot data logged via smartphones connected to hearing aids fitted with various MSAT settings. We observed a very interesting “adaptation journey”: after a few weeks of exploring different settings, a single preferred program quite often emerged. We considered this process the wearer’s “personal adaptation journey.”
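The point at which "a single preferred program emerged" could be operationalized in many ways. As one hypothetical example, the Python sketch below reports the first week in which one program accounts for at least 80% of logged use time; the threshold, the program names, and the weekly usage format are assumptions, not the criteria used in the pilot.

```python
from __future__ import annotations

from typing import Optional


def preferred_program_week(weekly_use_hours: list[dict[str, float]],
                           threshold: float = 0.8) -> Optional[tuple[int, str]]:
    """Return (week index, program) for the first week in which a single program accounts
    for at least `threshold` of logged use time, or None if no preference has emerged yet."""
    for week, use in enumerate(weekly_use_hours):
        total = sum(use.values())
        if total == 0:
            continue  # no usage logged that week
        program, hours = max(use.items(), key=lambda item: item[1])
        if hours / total >= threshold:
            return week, program
    return None


# Usage: exploration in weeks 0 and 1, a clear preference for "P2" in week 2.
weeks = [{"P1": 10, "P2": 9, "P3": 8}, {"P1": 12, "P2": 6}, {"P2": 20, "P1": 2}]
emerged = preferred_program_week(weeks)
```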

The personal adaptation journey was not always predictable. Some subjects chose a very active MSAT in quiet situations, and some chose a limited amount of MSAT in difficult and noisy situations. These unanticipated findings are examples of wearer intent. In certain circumstances, some wearers wanted to block out all disturbing sounds, while others chose to be immersed in higher levels of noise. We quickly learned that the acoustic preferences of the wearer were individual, often unique, and not predictable, although certainly group trends were evident.

Other “use patterns” emerged, too. For many subjects, MSAT activation increased in the afternoon and evening compared with the morning. We speculated that fatigue later in the day created a greater desire, or perhaps a greater need, for advanced noise processing. Therefore, as technology and our fitting methods progress, the role of the Hearing Care Professional (HCP) may include programming the hearing aid to adapt to the wearer’s needs across different time frames, and estimating the individual wearer’s needs to provide optimal hearing aid settings in various acoustic situations.
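A time-of-day pattern like this can be summarized directly from logged data. The Python sketch assumes each log sample is a timestamp plus a flag indicating whether MSAT was active, and it uses arbitrary period boundaries (before noon, noon to 6 pm, after 6 pm); the data format, the boundaries, and the function names are assumptions for illustration.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import datetime


def period_of_day(ts: datetime) -> str:
    """Assign a timestamp to an (arbitrary, assumed) period of the day."""
    if ts.hour < 12:
        return "morning"
    if ts.hour < 18:
        return "afternoon"
    return "evening"


def msat_share_by_period(samples: list[tuple[datetime, bool]]) -> dict[str, float]:
    """Fraction of logged samples in each period during which MSAT was active."""
    active: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for ts, msat_active in samples:
        period = period_of_day(ts)
        total[period] += 1
        active[period] += int(msat_active)
    return {period: active[period] / total[period] for period in total}
```

Periods with no logged samples are simply absent from the result rather than reported as zero, so sparse data is not mistaken for a preference.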

Geotags and Hearing Aids

Geotags are location data, typically latitude and longitude, derived from wirelessly received radio signals (such as GPS) and seamlessly captured by your smartphone. Geotags are also assigned whenever you “check in” via social media (unless you disable the function in your Preferences). A geotag describes your location and may include a description of where you are; the date, time, and other data can also be captured.

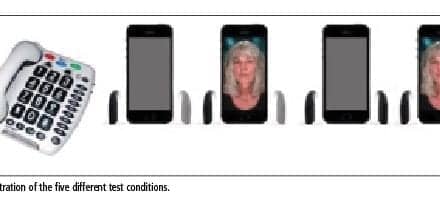

Figure 1. As with the photos on your smartphone, smart hearing aids can now geotag hearing locations/situations for better-optimized programming and automatic listening responses.

What if your smartphone (which is already receiving geotags, and is already streaming information to your hearing aids) were to use the same geotag information to seamlessly and automatically instruct and reprogram the hearing aids, based on where you are? What if the geotag from the coffee shop were captured by the phone and sent to the hearing aids, instructing them to reprogram based on your intent and previous preferences? In other words, the phone essentially instructs the hearing aids, “Entering a coffee shop. Use narrow directionality and maximal noise reduction.”
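The coffee-shop scenario amounts to a lookup from a place category (derived from the geotag) to the wearer's previously preferred settings. A minimal Python sketch that builds the instruction quoted above; the rule table `PLACE_RULES` and the function `instruction_for` are hypothetical and do not represent any product's interface.

```python
from __future__ import annotations

from typing import Optional

# Hypothetical per-wearer rules: place category -> previously preferred settings.
PLACE_RULES: dict[str, dict[str, str]] = {
    "coffee shop": {"directionality": "narrow", "noise_reduction": "maximal"},
    "home": {"directionality": "omni", "noise_reduction": "mild"},
}


def instruction_for(place_category: str,
                    rules: dict[str, dict[str, str]] = PLACE_RULES) -> Optional[str]:
    """Build the instruction a phone could send to the hearing aids on arrival at a place."""
    settings = rules.get(place_category)
    if settings is None:
        return None  # unknown place: leave the current program unchanged
    return (f"Entering a {place_category}. Use {settings['directionality']} directionality "
            f"and {settings['noise_reduction']} noise reduction.")
```

Returning `None` for unknown places is a deliberate choice: without a learned preference, the safest action is to change nothing.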

In fact, smart hearing aids already respond to geotags using “If-This-Then-That” (IFTTT) recipes.8 While using IFTTT, the user defines an area with a virtual fence around it, and every time the wearer enters the area, IFTTT schedules an action for the hearing aid. Participants in the MSAT pilot studies in Denmark have used this to automatically transmit something like “Entering a busy food court, use narrow directionality and maximal noise reduction.” Of note, these interfaces and technology are already available in Oticon Opn products.
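A virtual fence of this kind can be modeled as a circle around a point: compute the great-circle (haversine) distance from the fence center, and fire the action only on the transition from outside to inside, so the hearing aids are not re-instructed on every location update. The Python sketch below is a generic geofence, not IFTTT's implementation or API; the `Geofence` class, the example coordinates, and the 100 m radius are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass
from typing import Optional

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass
class Geofence:
    name: str
    lat: float
    lon: float
    radius_m: float
    action: str            # instruction to send to the hearing aids on entry
    inside: bool = False   # last known state, used to detect the entry transition

    def update(self, lat: float, lon: float) -> Optional[str]:
        """Feed a new location fix; return the action only when the wearer has just entered."""
        now_inside = haversine_m(self.lat, self.lon, lat, lon) <= self.radius_m
        entered = now_inside and not self.inside
        self.inside = now_inside
        return self.action if entered else None


# Usage with arbitrary example coordinates.
food_court = Geofence("food court", 55.6761, 12.5683, 100.0,
                      "Entering a busy food court, use narrow directionality "
                      "and maximal noise reduction.")
```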

Applying Smarter Analysis for Improved Dialogue with the HCP

Analysis of usage data, together with dialogue with HCPs and with people who wear hearing aids, provided further insight into the first steps. It seems very important to continue developing even more sophisticated hearing aids (ie, “smart hearing aids”) that adapt to the wearer’s intent in real time, when desired and programmed to do so, as informed by the conversations and information exchange between the hearing aid wearer and the HCP. As discussed above, the communication needs of the hearing aid wearer clearly change across the day, depending on the people, the acoustic environment, the primary talker (gender, voice loudness, pitch, accent, etc), the background noise, the time of day, the wearer’s tiredness, and more. Thus, a static hearing aid fitting seems unlikely to meet the needs of people listening and participating in dynamic acoustic environments.

Today, important dialogue between the hearing aid wearer and the HCP facilitates the personalization of a hearing aid fitting. In the near future, the analysis of logged data may be used to augment that dialogue. For example, the HCP-patient dialogue might include data-based questions, such as “You seem to prefer more help from your hearing aids later in the day, perhaps around dinner time. Would you like the hearing aids to provide a little more help at that time, or perhaps earlier?” In other words, the data may provide a starting point and insight for a dialogue which combines the wearer’s experience and expectations.
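A data-based talking point like the one above could be generated from summarized logs. In this hypothetical Python sketch, the input is the share of time extra help (eg, MSAT) was active in the morning and in the evening, and the prompt is suggested to the HCP only when the evening share exceeds the morning share by an assumed margin of 20 percentage points; the function name, input format, and margin are illustrative.

```python
from __future__ import annotations

from typing import Optional


def dialogue_prompt(active_share: dict[str, float], min_increase: float = 0.2) -> Optional[str]:
    """Suggest a data-based question when extra help is used notably more in the evening."""
    morning = active_share.get("morning")
    evening = active_share.get("evening")
    if morning is None or evening is None:
        return None  # not enough logged data to compare
    if evening - morning < min_increase:
        return None  # no clear time-of-day pattern
    return ("You seem to prefer more help from your hearing aids later in the day, "
            "perhaps around dinner time. Would you like the hearing aids to provide "
            "a little more help at that time, or perhaps earlier?")
```

The output is a conversation starter for the HCP, not an automatic setting change; the wearer's answer decides what, if anything, is reprogrammed.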

Historically, audiology-based research has treated speech in noise as a relatively fixed acoustic scene. That is, speech has sometimes been presented against a noisy background, and the hearing aid wearer’s task has been to repeat words or comment on their ability to understand speech in noise. While this approach has doubtlessly helped us understand and improve our patients’ communication, it does not typically support in-depth discussion of individual sound scenes, nor does it account for how individual needs and preferences change throughout the day. Datalogging, geotagging, and use patterns, by contrast, allow greater coverage and analysis of each wearer’s unique use scenarios. They also allow the wearer, with assistance from the HCP, to learn much more about how to cope with hearing loss and benefit from hearing aids throughout the day.

Douglas L. Beck, AuD, is Executive Director of Academic Sciences at Oticon Inc, in Somerset, NJ, and Niels Henrik Pontoppidan, PhD, is Research Area Manager, Augmented Hearing, at the Oticon A/S Eriksholm Research Center in Snekkersten, Denmark.

Correspondence can be addressed to Dr Beck.

Citation for this article: Beck DL, Pontoppidan NH. Predicting the intent of a hearing aid wearer. Hearing Review. 2019;26(6):20-21.

References

1. Beck DL, Danhauer JL, Abrams HB, et al. Audiologic considerations for people with normal hearing sensitivity yet hearing difficulty and/or speech-in-noise problems. Hearing Review. 2018;25(10):28-38.
2. Beck DL, Le Goff N. Speech-in-noise test results for Oticon Opn. Hearing Review. 2017;24(9):26-30.
3. Beck DL, Le Goff N. A paradigm shift in hearing aid technology. Hearing Review. 2016;23(6):18-20.
4. Ohlenforst B, Wendt D, Kramer SE, Naylor G, Zekveld AA, Lunner T. Impact of SNR, masker type and noise reduction processing on sentence recognition performance and listening effort as indicated by the pupil dilation response. Hear Res. 2018;365:90-99.
5. Beck DL, Ng E, Jensen JJ. A scoping review 2019: OpenSound Navigator. Hearing Review. 2019;26(2):28-31.
6. Beck DL, Callaway SL. Breakthroughs in signal processing and feedback reduction lead to better speech understanding. Hearing Review. 2019;26(4):30-31.
7. Pichora-Fuller MK, Kramer SE, Eckert MA, et al. Hearing impairment and cognitive energy: The Framework for Understanding Effortful Listening (FUEL). Ear Hear. 2016;37(1):5S-27S.
8. Beck DL, Nelson T, Porsbo M. Connecting smart hearing aids to the internet via IFTTT. Hearing Review. 2017;24(2):36-37.