| Larry Revit, MA, is president of Revitronix, Braintree, Vt, and is an audio engineer, hearing industry consultant, and musician. |

Personal and technical observations related to hearing aids and the performance of music—from the point of view of a musician with a severe hearing impairment. Although modern digital hearing aids can do a fairly good job of helping a hearing-impaired person enjoy listening to music, my experience is that the needs of performing musicians are not often met by today’s hearing aids. Here’s why.

You’ve probably heard this song before: “Music is my life.” Certainly, there are other vital aspects to my life, but here’s the way it is for me: Unless I regularly engage in the practice and performance of music, my enjoyment of living seems to decline rapidly. That makes music very special to me, personally.

This two-part essay is about my personal and technical observations related to hearing aids and the performance of music—from the point of view of a musician with a severe hearing impairment. In a nutshell, although conventional (modern digital) hearing aids can do a fairly good job of helping a hearing-impaired person enjoy listening to music, my experience is that the needs of performing musicians are not often met by today’s hearing aids. The act of performing music for an audience can be a joy to a musician who can hear well during a performance. However, to a musician who can’t hear well on stage, the experience can be frustrating, if not disastrous.

To complement Marshall Chasin’s article in this issue and elsewhere,1,2 here are a few other things about music that make it different from speech.

Frequencies of Importance

| FIGURE 1. From Mueller & Killion3 Count-the-Dots Audiogram for calculating the Articulation Index. Each dot represents 1% of the importance for speech intelligibility. Reprinted with the permission of The Hearing Journal and its publisher, Lippincott Williams & Wilkins. |

With speech, the most important spectral range is above 1000 Hz, as illustrated in the Mueller and Killion3 “count the dots” audiogram (Figure 1). The dots represent the importance of various frequencies, at various hearing levels, for speech intelligibility. A total of 74 of the 100 dots are located at or above 1000 Hz, meaning essentially that the frequencies at or above 1000 Hz contribute 74% of the importance of a speech signal for intelligibility. It’s not surprising, therefore, that the bulk of attention in hearing aid fitting programs is often given to fine-tuning the frequency response at or above 1000 Hz (Figure 2).

With music, somewhat the opposite is true. Although the highest orchestral pitches may reach into the 4-5 kHz range,4,5 the overwhelming majority of the pitches of music exist in the lower half of the auditory spectrum—that is, with corresponding fundamental frequencies at approximately 1000 Hz and below.

For example, 63 of the notes (about 72%) of an 88-key piano keyboard have pitches whose fundamental frequencies are below 1000 Hz (Figure 3). With the human singing voice, almost all of the pitches have fundamental frequencies below 1000 Hz. The highest pitch, designated “soprano” (C6), has a corresponding fundamental frequency of 1046.5 Hz.4 Only a “sopranino” can sing higher.6
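The keyboard count above can be checked with a few lines of arithmetic. The sketch below assumes standard 12-tone equal temperament with A4 tuned to 440 Hz (an assumption; the text does not state the tuning), and the function name `piano_key_frequency` is only illustrative.

```python
def piano_key_frequency(key_number, a4_hz=440.0):
    """Fundamental frequency of piano key 1..88, assuming equal temperament.

    Key 49 is A4; each key is one semitone (a factor of 2**(1/12)) from its neighbor.
    """
    return a4_hz * 2 ** ((key_number - 49) / 12)


# Fundamentals of all 88 keys, from A0 (key 1) to C8 (key 88).
fundamentals = [piano_key_frequency(k) for k in range(1, 89)]

# Count the keys whose fundamental lies below 1000 Hz.
below_1k = sum(1 for f in fundamentals if f < 1000.0)
share = 100 * below_1k / len(fundamentals)

print(below_1k, round(share))             # 63 72
print(round(piano_key_frequency(64), 1))  # C6: 1046.5
```

Under that tuning assumption, key 63 (B5, about 988 Hz) is the last key below 1000 Hz, and key 64 is the soprano C6.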

| FIGURE 2. Two examples of typical fine-tuning screens from hearing aid fitting programs. Most of the adjustable frequencies are higher than 1000 Hz. |

At the low-pitch end of things, Figure 3 indicates that the lowest normal note of a guitar has a fundamental frequency of 82.4 Hz, and the lowest note of a double bass has a fundamental frequency of 41.2 Hz (not to mention the 30.9 Hz low-B of today’s five-string electric basses). Granted, for these low notes, the pitch can be heard even if the fundamental is missing.5 However, the fundamental is often important for hearing the balance of one note against another, as well as for hearing the natural warmth and fullness of low-pitch notes.

Implications for the Programming of Hearing Aids for Music

A musician needs to be able to hear all of the musical pitches in precise balance. If a group of notes is to sound equally loud to the listener, the player needs to hear those notes as sounding equally loud. It’s important to keep in mind that every octave has 12 notes. There are just as many notes, for example, between 100 and 200 Hz as there are between 1000 and 2000 Hz. So, one may ask, why do hearing aid fitting programs (if they are to apply to music) usually have many more adjustable-gain bands above 1000 Hz than below (eg, compare Figure 2 with Figure 3)? Why not have equal adjustability in every pitch region?
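The point about octaves can be made concrete by counting equal-tempered pitches in each band. This is a minimal sketch, assuming A4 = 440 Hz; `pitches_in_band` is a hypothetical helper, not part of any fitting software.

```python
import math


def pitches_in_band(low_hz, high_hz, a4_hz=440.0):
    """Count equal-tempered pitches with low_hz <= fundamental < high_hz."""
    # Position of each band edge in semitones relative to A4.
    low_semitones = 12 * math.log2(low_hz / a4_hz)
    high_semitones = 12 * math.log2(high_hz / a4_hz)
    # Pitches sit at integer semitone positions, so count the integers in range.
    return math.ceil(high_semitones) - math.ceil(low_semitones)


low_octave = pitches_in_band(100, 200)
high_octave = pitches_in_band(1000, 2000)
print(low_octave, high_octave)  # 12 12
```

Both counts are 12, although the lower band is only 100 Hz wide and the upper band is 1000 Hz wide.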

| FIGURE 3. An 88-key piano keyboard. At bottom are fundamental pitch frequencies. Above the keyboard are pitches of interest. A total of 63 of the 88 pitches have fundamental frequencies below 1000 Hz. Compare this to the hearing-aid fitting software of Figure 2, where most of the adjustable frequencies are above 1000 Hz. |

Fixing resonances. It’s not uncommon for hearing aids (especially when vented or undamped) to have unwanted resonances or anti-resonances (peaks or dips in the frequency response) in the range of the musical pitches. Thus, one or more notes may either jump out or become buried. If there is, for example, one note that jumps out in the octave surrounding 500 Hz, the only available “solution” may be to reduce the gain in that entire octave band, which would reduce the volume of all 12 notes in that range—hardly a solution.

One hearing-impaired musician (a professional bass player and music engineer, who contributed an essay that is available online) has proposed that it would be very helpful to have two narrowband filter controls with adjustable attenuation and with adjustable center frequencies in the 125-250 and 250-500 Hz ranges (Rick Ledbetter, personal communication). I’d throw in my own two cents (as a guitar player and music engineer) to ask for adjustable-frequency, adjustable-bandwidth gain controls available to function as high as the 1500 Hz region, or above. Other musicians may have other ranges where they might desire control of gain in precise bandwidths.

The sound engineering world has a useful solution: the “parametric equalizer,” which lets the user select not only the gain and center frequency but also the bandwidth. It seems reasonable to surmise that the addition of such adjustable-frequency, adjustable bandwidth gain controls to hearing aid fitting programs would also be potentially useful for ironing out resonances affecting speech, as well as music.
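To make the idea concrete, here is a minimal sketch of one parametric band: a single peaking filter whose center frequency, gain, and Q (which sets the bandwidth) are all adjustable. The coefficient formulas follow the widely circulated "Audio EQ Cookbook" peaking-filter form by Robert Bristow-Johnson. This is not how any particular hearing aid or fitting program implements its filters, and the 16 kHz sample rate, 500 Hz center, -6 dB gain, and Q of 8 are assumed values chosen only for illustration.

```python
import cmath
import math


def peaking_eq_coeffs(center_hz, gain_db, q, sample_rate_hz):
    """Biquad coefficients (b, a) for one peaking band (Audio EQ Cookbook form)."""
    amp = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * center_hz / sample_rate_hz
    alpha = math.sin(w0) / (2 * q)  # larger Q gives a narrower band
    b = (1 + alpha * amp, -2 * math.cos(w0), 1 - alpha * amp)
    a = (1 + alpha / amp, -2 * math.cos(w0), 1 - alpha / amp)
    return b, a


def gain_db_at(freq_hz, b, a, sample_rate_hz):
    """Magnitude response of the biquad, in dB, at a single frequency."""
    z1 = cmath.exp(-1j * 2 * math.pi * freq_hz / sample_rate_hz)
    numerator = b[0] + b[1] * z1 + b[2] * z1 * z1
    denominator = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20 * math.log10(abs(numerator / denominator))


SAMPLE_RATE_HZ = 16000.0  # assumed, for illustration only

# Hypothetical fix: tame one note near 500 Hz with a narrow 6 dB cut.
b, a = peaking_eq_coeffs(500.0, -6.0, 8.0, SAMPLE_RATE_HZ)

for freq in (250.0, 500.0, 1000.0):
    print(freq, round(gain_db_at(freq, b, a, SAMPLE_RATE_HZ), 2))
```

With these assumed settings, the cut is the full 6 dB at 500 Hz but well under 1 dB an octave away, in contrast to lowering the gain of the whole octave band.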

What about higher frequencies; aren’t they also important for music? Oh yes, indeed! The sustained notes and percussion of music may be considered correlates of the vowels and consonants of speech. With speech, vocal tract resonances (formants), which occur at frequencies well above the fundamental (pitch) frequency of a voice, help the listener to distinguish one vowel from another.7 Similarly, resonances occurring above the fundamental (pitch) frequency of musical notes help the listener to distinguish the sound of one instrument from another.

In speech, the frequencies of the formant resonances are determined largely by manipulations of the volume spaces and air channels—the Helmholtz, or “bottle-shaped,” resonators—of the vocal tract.8 In musical instruments, the resonances are usually determined by fixed geometric properties of the instrument (tubing, string, and pipe lengths, etc), creating emphasis at one, two, or even several of the upper harmonics (integer multiples of the pitch frequency) of a given note.

Generally, the highest important vowel formant, F3, can be centered as high as about 3000 Hz. Instrumental harmonic resonances may occur in that same range, but they often extend much higher. For example, the violin (which is very rich in harmonics), often has significant harmonics above 5000 Hz, and the highest notes of a harmonica can even have significant harmonics as high as 10,000 Hz.4

Beyond Notes and Harmonics

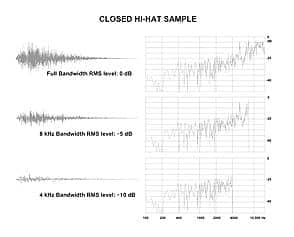

| FIGURE 4. Waveforms (left) and spectrum analyses (right) of three versions of the same strike of a closed hi-hat. Upper sample is unaltered. Middle sample was brickwall low-pass filtered at 8 kHz. Bottom sample was brickwall low-pass filtered at 4 kHz. Note: The spectrum analyses were obtained by the Fast Fourier Transform (FFT) method, which displays dB per unit Hz. A 1/3-octave band analysis, which displays dB per 1/3-octave band, better represents how we perceive the relative energy in various frequency ranges. To simulate what a 1/3-octave band analysis would show, the FFT spectra were tilted upward using a broadband filter with a rising slope of 3 dB per octave. |

Music engineers generally know that the frequencies above 4 kHz are responsible for the “sizzle of cymbals” or the “shimmer of violins.” I call the 4-8 kHz band the “hi-hat band,” because having sufficient gain in that band, to me, means the difference between hearing and not hearing a hi-hat in the context of an instrumental mix.

Figure 4 illustrates this point. On the upper left, for reference purposes, is the waveform of a single strike of a closed hi-hat. I arbitrarily assigned an RMS level of 0 dB to this reference condition. The graph at the upper right is a spectrum analysis of this full-bandwidth, reference hi-hat waveform. Note the generally rising response, with visible resonances at about 5500 and 8000 Hz. For the center-left waveform, the reference waveform was filtered using a brickwall low-pass filter having a cutoff at 8000 Hz. Note that removing the energy above 8000 Hz has lowered the RMS level by 5 dB. However, the resonances at 5500 and 8000 Hz are still noticeable in the spectrum to the right. For the lower waveform, the reference waveform was filtered using a brickwall low-pass filter having a cutoff at 4000 Hz. Here the RMS level is reduced 10 dB from the reference level, and the high-frequency resonances are now completely missing. Although the lower waveform in isolation (if loud enough to be audible) may present a sound identifiable as a hi-hat (owing to the temporal envelope and remnant spectral characteristics), the masking elements of an instrumental mix, along with the drastically reduced level and bandwidth, render the hi-hat inaudible in my experience.
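The 3 dB per octave tilt mentioned in the Figure 4 caption follows from how 1/3-octave bands are defined: each band's width is proportional to its center frequency, so a spectrum that is flat in dB per Hz gains 10·log10(2), about 3 dB, of band level for every doubling of frequency. The sketch below checks that arithmetic, assuming ideal band edges at the center frequency times 2^(±1/6); the function names are illustrative.

```python
import math


def third_octave_bandwidth_hz(center_hz):
    """Width of an ideal 1/3-octave band with edges at center * 2**(-1/6) and center * 2**(1/6)."""
    return center_hz * (2 ** (1 / 6) - 2 ** (-1 / 6))


def band_level_db(center_hz, density_db_per_hz=0.0):
    """Band level for a spectrum with constant power density across the band."""
    return density_db_per_hz + 10 * math.log10(third_octave_bandwidth_hz(center_hz))


# Band level rise per octave for a spectrum that looks flat on a per-Hz (FFT) display.
tilt_db_per_octave = band_level_db(8000.0) - band_level_db(4000.0)
print(round(tilt_db_per_octave, 2))  # 3.01
```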

So … What Should a “Music Program” Look Like?

Ready? The “music program” should have two embodiments: one in the fitting software and one in the hearing aid. My comments will apply to the former and be limited to what I’ve discussed above.

Inserting appropriate parametric equalizers. Because most of the pitches of musical notes fall at or below 1000 Hz, sufficient adjustability should be available for fine tuning—essentially balancing—those pitches. Also, as instrumental resonances occur at higher frequencies, fine tuning may also be required for smooth responses in the upper frequencies. Making available several adjustable-frequency, adjustable-bandwidth gain controls (parametric equalizers) may present a solution.

Certainly, “keeping it simple” is an important goal to apply to fitting software, and adding several parametric equalizers may tend to defeat that purpose. However, restricting the visibility of the extra controls, until selected from a menu by the fitter, would make the required additional flexibility available while not complicating the look of the screen when that flexibility is not called for.

Extending high-frequency gain without feedback. Any hearing aid fitter knows that the requirement of providing sufficient gain in the “hi-hat band” (above 4 kHz) can easily increase susceptibility to feedback. In my experience, a solution is to use an earpiece with a “foam-assisted” sealing method. In one hearing aid system I have, terminating the earpiece bores with Comply™ Snap Tips does the trick. With another, I use either Comply™ Soft Wraps or Etymotic Research E-A-R Ring™ Seals to get up to about 30 dB of (coupler) gain at 8 kHz without feedback.

Personal Observations: Performing Onstage with Amplified Instruments

It may seem obvious, but a musician performing onstage has to hear not only what they are playing, but also what is being played by the other musicians who are creating the music. Pitch, rhythm, and timbre (tonal balance) are all important, but the overriding consideration is that the musician, ultimately, is responsible for presenting the correct balance and blend of sounds to the audience. With major “rock” or “pop” concerts, usually every instrument is miked and fed to separate “house” and “stage monitor” sound systems. Typically, there are separate sound engineers: one to mix for the house and one to mix for the stage. Often, there are even separate stage monitor mixes available for each performer or group of performers onstage. In these cases, the performing musician relies on (trusts) the house engineer to present a correct balance to the audience. And, assuming the stage monitors are also correctly mixed by the monitor engineer, the musician need only be able to hear the stage monitor system well, and play well, to fulfill their performing responsibilities.

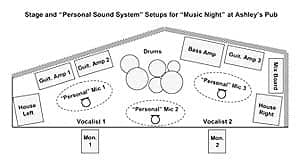

| FIGURE 5. Typical setup for “Music Night” (open stage) at a club venue. The author typically plays electric guitar and stands near Guitar Amp 1 (left), but cannot hear Guitar Amp 3. “Personal” mics are part of a “Personal Sound System” solution described in the text and diagramed in Figure 6. |

However, in the majority of performances at “small club”-type venues, where in fact most musicians play, it may be only the vocals that are fed to the house sound system. There may even be no stage monitor system at all. In such cases, players with normal hearing can often get by, even though doing so may present something of a challenge. But for a musician with impaired hearing, such conditions present a struggle.

Figure 5 shows the typical stage setup at the biweekly “Ashley’s Music Night,” an “invitational open stage” where I perform at a local watering hole in nearby Randolph, Vt. Most of the players have “day gigs,” but currently or previously have played in bands professionally. I usually play electric guitar at amp position 1 (audience left), but sometimes I’m at position 3 (audience right), or I play electric bass (near the drums). Here’s my typical experience:

At the beginning of the night, things may start out at reasonable levels, say 90 dB SPL or so. I will usually be wearing both of my hearing aids (currently a different model for each ear) and can hear pretty much everything at these early moments, perhaps with the exception of the guitar amp on the opposite side of the stage. The amp at that location is usually not loud enough for me to hear comfortably “from all the way over there,” particularly because the amp’s speakers are directed toward the audience, and not toward my end of the stage.

Inevitably, before long, the overall levels creep up to about 100 dB SPL (especially owing to the electric bass), and my left hearing aid begins to overload—so I take it off. The right hearing aid, which has an input limiter, doesn’t overload, but because it is limiting, there are no dynamics coming through it. For example, there is no percussiveness to the drums. This is not so bad, because my now-unaided left ear picks up the percussive snare drum beats. But I can’t hear the cymbals or the hi-hat, because the right hearing aid does not have enough gain in the 4-8 kHz band, especially when the input limiter is kicking everything way down. So I make do, until the guys tell me I’m playing too loud, because I cannot hear the mid range and highs of my electric guitar very well and I’ve turned my guitar up to hear it in balance with the now-pounding bass. And then the levels creep up to 105 dB or so—at which point my other hearing aid begins to distort—so I take that one off. At this point, I’m just trying to compensate and adjust—not really hearing the balance of the music—and certainly not interacting comfortably in creative combination with the other players, especially with the guitar on the opposite side of the stage.

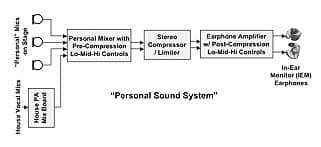

| FIGURE 6. Schematic diagram of “Personal Sound System” used by the author to assist with hearing while performing onstage. See Figure 5 for placement of “personal” mics on stage. |

I haven’t even mentioned that, between songs, the other players will usually discuss what to play next. For me, the leader usually will do his best to remember to signal the letter of the key we’ll be playing in, and I do my best to come in at the right time. If the leader forgets to signal the key to me, which does happen, I often will start playing in a key 1/2-step above what everyone else is playing in—as my pitch perception tends to be “flat.” Ouch! They do let me know when that happens, and I, with a red face, correct the key immediately.

So Music Night, as you may guess, can be stressful. Yet I keep going to be a part of it. I surmise that people like my playing enough to put up with the “miscues” attributable to my hearing loss. Good thing these are not paid gigs, and I earn my keep by doing audiology-related R&D and consulting!

A Makeshift “Personal Sound System”

One thing I’ve tried, with limited success, is to set up my own “personal sound system” using in-ear monitors (IEMs). I’ve found that, if I set up three mics, one for stage-right, one for stage-center, and one for stage-left, plus taking a direct feed from the vocalists’ mics via the house PA system (refer to Figures 5 and 6), I can pick up all of the musical elements with sufficient separation to balance and hear everything pretty well. I feed these sources to a mixer and then feed the stereo mix, via an outboard stereo compressor, to an earphone amplifier, which in turn feeds a pair of IEM earphones available from Westone or Shure. (Essentially, these are insert earphones capable of higher undistorted outputs than are typical of “consumer-type” insert earphones.) The mixer and earphone amplifier each have tone controls (bass, mid, and treble) that provide frequency shaping to accommodate the configuration of my hearing loss, and the compressor allows for dynamic-range compression and upper-level output limiting.
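The compressor stage in this chain can be described by a static input/output curve: levels below a threshold pass unchanged, levels above it grow more slowly according to the compression ratio, and a ceiling caps the output. The sketch below is only an illustration. The 85 dB threshold, 3:1 ratio, and 100 dB ceiling are hypothetical values, not the settings of the equipment described here, and real compressors add attack and release timing that is not modeled.

```python
def compressed_level_db(input_db, threshold_db=85.0, ratio=3.0, ceiling_db=100.0):
    """Static output level of a compressor followed by a hard output limiter.

    All default values are hypothetical and chosen only for illustration.
    """
    if input_db <= threshold_db:
        output_db = input_db  # below threshold: unity gain
    else:
        # Above threshold: every `ratio` dB of extra input adds 1 dB of output.
        output_db = threshold_db + (input_db - threshold_db) / ratio
    return min(output_db, ceiling_db)  # limiter: never exceed the ceiling


for level in (80.0, 90.0, 105.0, 140.0):
    print(level, round(compressed_level_db(level), 1))
```

With these assumed settings, a 25 dB spread of inputs from 80 to 105 dB is squeezed into roughly 12 dB of output, and extreme peaks are held at the ceiling.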

My experience with this kind of personal sound system has been that I can hear and perform comfortably! Unfortunately, this kind of system is not normally a viable solution, because: 1) It is complicated and time-consuming to set up; 2) The additional microphones usually take up too much space onstage, making it difficult for the musicians to find a comfortable place to stand; and 3) There is no accommodation for picking up “off-mic” banter and instructions between songs. For this last issue, I’ve tried using a wireless lapel mic worn by the stage leader, but this has so far proven unsatisfactory.

Ideas as Yet Untried

One thing I’ve been thinking of trying, assuming I can get some hearing aids that don’t distort with 100-105 dB rms input SPLs, is to use a directional microphone on one ear, to pick up the guitar amp from the other side of the stage, while using an omni mic on the other ear, to pick up my own instrument amp and other nearby sources. The same setup might prove effective in picking up between-song banter and instructions. I don’t know whether a first-order directional mic (such as commonly available in hearing aids) will be sufficiently directional for the purpose. I’m thinking that, if available, either a Link-it™ (from Etymotic Research), coupled to the telecoil input of a BTE, or a Triano with a TriMic (from Siemens) might work best for the directional side, as these microphones provide a high order of directivity.

Another idea might be to use a “modified” 3D Active Ambient™ IEM System (from Sensaphonics).9 The 3D Active Ambient IEM System is designed to deliver high-fidelity wireless signals from stage sound systems, while providing high-fidelity ambient sound to the user via binaural, field-corrected, head-worn microphones. The system incorporates selectable levels of output limiting to protect the user’s ears. The “modifications” I have in mind are that the frequency response would be modified to accommodate audiometric configurations, perhaps with selectable TILL processing,10 and selectable omni or directional microphones to be integrated on either side of the head-worn binaural ambient mic system.

Ah, to dream. I mention these “tried-and-failed” and “untried” solutions simply to get the reader thinking of other possible solutions, hoping that eventually something that works may come to light.

Final Thoughts

In concluding, I want just to echo one important request to the hearing aid industry, made by Rick Ledbetter in his essay available online this month. In the last 5 years or so, I’ve had the “unusual privilege” to be able to program three sets of my own digital hearing aids. I have found that “getting it right” can require hours of tweaking over a considerable span of time. It’s a good thing that the companies that have provided me with programmable hearing aids also have provided me with the necessary programming software and interface hardware; otherwise, I’d have become a real nuisance to some clinicians who have otherwise remained my friends.

Professional musicians understand what they need to hear, and today’s tech-savvy musicians can learn to operate hearing aid fitting software, usually on their own. It is my hope that manufacturers will routinely make available to musicians the programming software and interface hardware necessary for them to take control of their auditory lives.

References

1. Chasin M. Music and hearing aids. Hear Jour. 2003;56(7):36-41.

2. Chasin M. Hearing aids and musicians. Hearing Review. 2006;13(3):24-31.

3. Mueller HG, Killion MC. An easy method for calculating the articulation index. Hear Jour. 1990;43(9):14-17.

4. Olson HF. Music, Physics and Engineering. New York: Dover; 1967.

5. Moore BCJ. An Introduction to the Psychology of Hearing. 2nd ed. London: Academic Press; 1982.

6. Wikipedia. Vocal range. Available at: en.wikipedia.org/wiki/Vocal_range. Accessed January 15, 2009.

7. Kent RD, Read C. The Acoustic Analysis of Speech. San Diego: Singular Publishing; 1992.

8. Helmholtz H. On the Sensations of Tone. New York: Dover; 1954.

9. Santucci M. Please welcome onstage…personal in-the-ear monitoring. Hearing Review. 2006;13(3):60-67.

10. Killion MC, Staab WJ, Preves DA. Classifying automatic signal processors. Hear Instrum. 1990;41(8):24-26.

Correspondence can be addressed to HR at [email protected] or Larry Revit at.

Citation for this article:

Revit LJ. What’s so special about music? Hearing Review. 2009;16(2):12-19.